Hi All,

We recently encountered an issue where one of our applications was experiencing intermittent timeouts, causing transaction failures. With assistance from Imperva TAC, we identified that the downstream (origin) servers were sending FIN, ACK packets, which closed connections prematurely. To mitigate the issue, we disabled TCP pre-pooling in Imperva CWAF for that specific application, and the problem was resolved.

Details of what we changed and why are below:

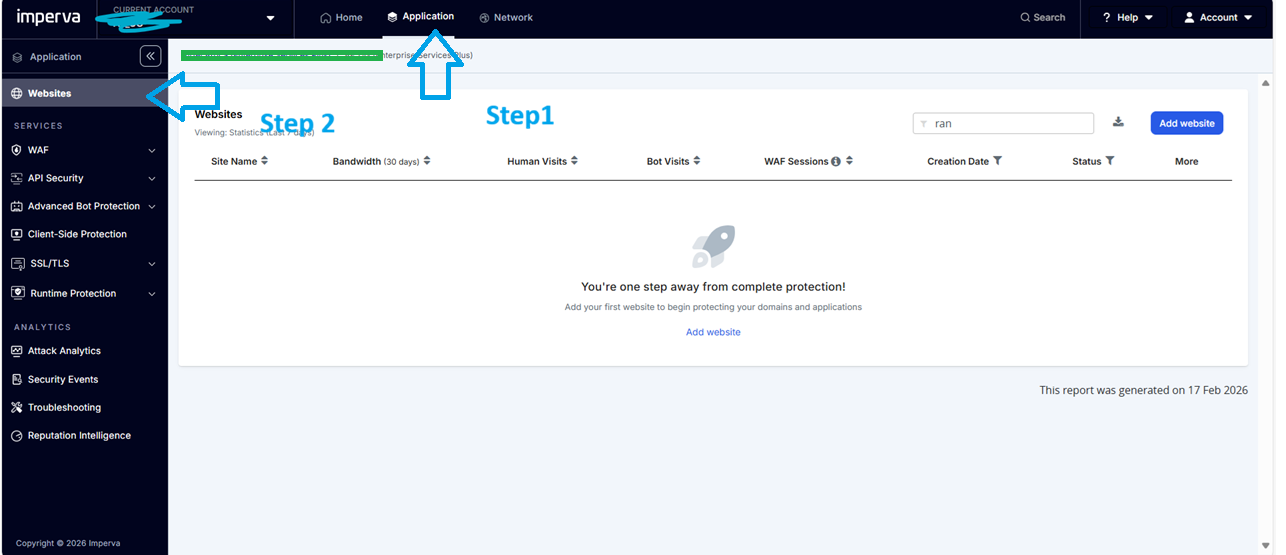

Steps: Go to Imperva CWAF Dashboard ---> Application ---> Websites

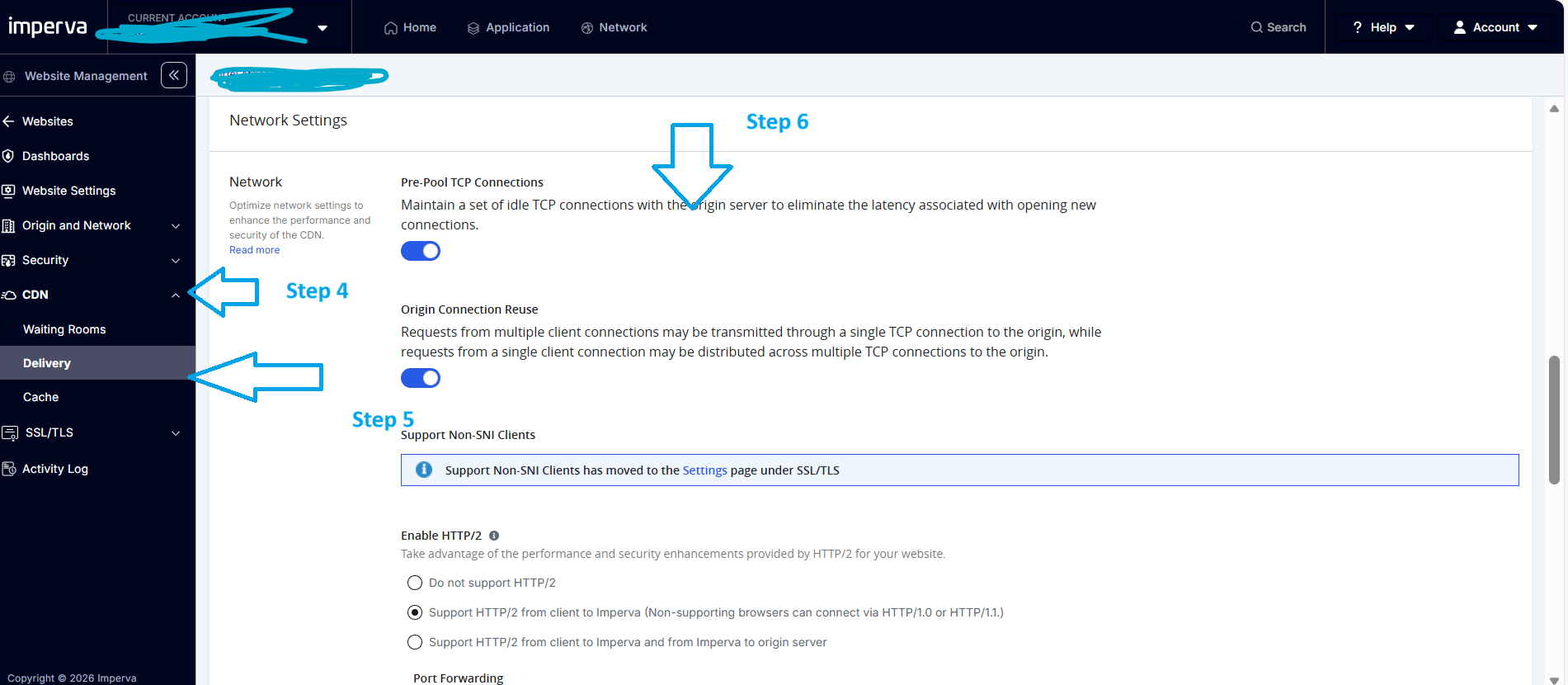

Step 3: Click the site you want to change, then follow the steps below to disable TCP pre-pooling.

Analysis and observation:

Why enabling TCP pre-pooling can cause timeouts

1. Backend servers expect a fresh TCP handshake. With pre-pooling, Imperva opens TCP connections to your origin and keeps them open before any client request arrives. Some applications or load balancers expect:

-> New TCP handshake per request

-> Specific idle timeout

-> Specific source IP behavior

When they instead receive a reused, pre-opened connection, they may:

-> Drop the connection

-> Reset it

-> Keep it half‑open

-> Fail to respond within Imperva's timeout window

This manifests as client-side timeouts.

2. Idle timeout mismatch between Imperva and your origin. Pre-pooled connections sit idle until a request arrives. If your origin has a shorter idle timeout (e.g., 30s) than Imperva's pre-pooling idle timeout (e.g., 300s), then:

-> Origin silently closes the connection

-> Imperva tries to reuse it

-> First packet from Imperva hits a dead socket

Result: a timeout, a TCP RST, or a FIN, ACK. This is one of the most common causes.
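The "dead socket" step can be detected before reuse. The Python sketch below shows the kind of check a connection pool could run on an idle connection; it is not Imperva's implementation, and `peer_has_closed` is a hypothetical helper. It relies on standard socket behavior: once the origin's FIN has arrived, the socket becomes readable and a `recv` with `MSG_PEEK` returns an empty byte string, while an RST surfaces as `ConnectionResetError`.

```python
import select
import socket


def peer_has_closed(sock: socket.socket) -> bool:
    """Return True if the remote side has closed (FIN) or reset (RST) the connection.

    Hypothetical pre-reuse check for an idle pooled socket. MSG_PEEK leaves
    any pending bytes in the receive buffer, so nothing is consumed.
    """
    readable, _, _ = select.select([sock], [], [], 0)
    if not readable:
        return False  # nothing pending: the socket still looks usable
    try:
        data = sock.recv(1, socket.MSG_PEEK)
    except (ConnectionResetError, ConnectionAbortedError):
        return True  # the peer sent RST
    except BlockingIOError:
        return False  # spurious readiness on a non-blocking socket
    # An empty read means the peer sent FIN. Non-empty data on an idle
    # connection is unexpected, but the socket itself is still open.
    return data == b""


if __name__ == "__main__":
    client, origin = socket.socketpair()
    print(peer_has_closed(client))  # False: both ends open
    origin.close()  # simulate the origin closing on its idle timeout
    print(peer_has_closed(client))  # True: reuse would hit a closed socket
    client.close()
```

The check only narrows the race: the origin can still close the connection between the check and the next request, so a pool would typically also need to retry idempotent requests on a fresh connection.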

3. Origin load balancer health checks vs. pre-pooled sockets. Some load balancers (F5, NGINX, HAProxy, AWS ALB) treat long-lived idle connections as:

-> Stale

-> Unhealthy

-> Not part of the active pool

So when Imperva sends traffic through them, the LB may:

-> Drop the packet

-> Route it incorrectly

-> Reset the connection

Again, this leads to transaction timeouts.
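One common way to avoid handing traffic to a load balancer over a connection it has already written off is to cap how long a pooled connection may sit idle. The Python sketch below is a hypothetical pool (`IdleLimitedPool` and `max_idle` are illustrative names, not an Imperva setting) that closes any connection idle longer than the limit instead of reusing it; the clock is injectable so the behavior can be checked without waiting.

```python
import time
from collections import deque
from typing import Callable, Deque, Generic, Optional, Tuple, TypeVar

C = TypeVar("C")


class IdleLimitedPool(Generic[C]):
    """Hypothetical pool that refuses to reuse connections idle longer than max_idle."""

    def __init__(self, max_idle: float, close: Callable[[C], None],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_idle = max_idle
        self._close = close
        self._clock = clock
        # (connection, time it became idle); oldest on the left
        self._idle: Deque[Tuple[C, float]] = deque()

    def put(self, conn: C) -> None:
        """Return a connection to the pool and record when it went idle."""
        self._idle.append((conn, self._clock()))

    def get(self) -> Optional[C]:
        """Return a reusable connection, or None if the caller must open a new one."""
        now = self._clock()
        # Drop every connection past the idle limit: a load balancer may
        # already consider it stale and drop or reset traffic sent over it.
        while self._idle and now - self._idle[0][1] > self.max_idle:
            conn, _ = self._idle.popleft()
            self._close(conn)
        if self._idle:
            return self._idle.pop()[0]  # reuse the most recently idled connection
        return None
```

For example, with `max_idle=25.0`, a connection returned at t=0 is closed when `get()` runs at t=30, while one returned at t=10 is still handed out. The limit only helps if it is set below the load balancer's own idle timeout.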

4. Application protocol not compatible with connection reuse. If your backend uses:

-> Stateful protocols

-> Session‑bound TCP behavior

-> Custom keep‑alive logic

-> Backend‑initiated close events

then pre-pooled connections break the expected flow.

Example: some applications send a server-side FIN after each response. Imperva tries to reuse the connection -> the origin rejects it -> timeout.
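When the backend announces its close at the HTTP layer, a pooler can avoid reusing the connection at all. The Python sketch below applies the HTTP/1.x persistence rules from RFC 9112, section 9.3: an HTTP/1.1 connection persists unless the response carries `Connection: close`, while an HTTP/1.0 connection persists only with `Connection: keep-alive`. `can_reuse` is a hypothetical helper; an origin that sends a bare FIN without any such header can only be detected at the socket level.

```python
from typing import Mapping, Set


def connection_options(headers: Mapping[str, str]) -> Set[str]:
    """Collect the comma-separated, case-insensitive tokens of the Connection header."""
    for name, value in headers.items():
        if name.lower() == "connection":
            return {tok.strip().lower() for tok in value.split(",") if tok.strip()}
    return set()


def can_reuse(http_version: str, headers: Mapping[str, str]) -> bool:
    """Decide whether the connection may carry another request after this response.

    Follows the persistence rules in RFC 9112, section 9.3; the helper name
    and signature are illustrative.
    """
    options = connection_options(headers)
    if "close" in options:
        return False  # the server announced it will close (its FIN follows)
    if http_version == "HTTP/1.0":
        return "keep-alive" in options  # HTTP/1.0 must opt in to persistence
    return True  # HTTP/1.1 and later default to persistent connections
```

For example, `can_reuse("HTTP/1.1", {"Connection": "close"})` returns `False`, so a well-behaved pool would open a new connection for the next request instead of sending it into the server's FIN.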

5. Backend firewall or IDS drops long-lived connections. Firewalls often have:

-> Short TCP idle timers

-> Strict session‑tracking rules

-> Anti-spoofing checks

Pre-pooled connections may appear "suspicious" because:

-> They are opened without immediate data

-> They stay idle for long periods

-> They come from a fixed Imperva IP range

This leads to silent drops -> Imperva sees a timeout.
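Points 2, 3 and 5 share one piece of arithmetic: a pre-pooled connection only survives as long as the shortest idle timer on the path between Imperva and the application. The Python sketch below computes that budget and the range of idle ages in which a pooled connection would still be offered for reuse even though a downstream hop has already dropped it. The 300s pool timeout and 30s origin timeout are the illustrative figures from point 2; the firewall and load balancer values are hypothetical, so substitute your own.

```python
from typing import Dict, Optional, Tuple


def idle_budget(hop_timeouts: Dict[str, float]) -> Tuple[str, float]:
    """Return the hop with the shortest idle timeout and that timeout in seconds."""
    hop = min(hop_timeouts, key=hop_timeouts.__getitem__)
    return hop, hop_timeouts[hop]


def dead_reuse_window(pool_idle_timeout: float,
                      hop_timeouts: Dict[str, float]) -> Optional[Tuple[float, float]]:
    """Idle ages (from, to) in seconds at which the pool would still reuse a
    connection that some downstream hop has already torn down, or None if
    the pool never outlives the shortest downstream timer."""
    _, budget = idle_budget(hop_timeouts)
    if pool_idle_timeout <= budget:
        return None
    return budget, pool_idle_timeout


hops = {"firewall": 60.0, "load_balancer": 120.0, "origin": 30.0}  # hypothetical values
print(idle_budget(hops))                # ('origin', 30.0)
print(dead_reuse_window(300.0, hops))   # (30.0, 300.0)
```

With these numbers, any pooled connection that has been idle for more than roughly 30 seconds is already dead at the origin, which is the failure described in point 2.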

Here's the quickest way to isolate the issue: check the origin-side and CWAF-side packet captures (pcap) and look for:

-> TCP RST or FIN, ACK

-> Idle timeout closures

-> Connection closed by peer
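In Wireshark, the display filter `tcp.flags.reset == 1 || tcp.flags.fin == 1` shows these packets. Where Wireshark is not available, a short script can list them instead. The Python sketch below is a hypothetical helper, not an Imperva tool, and assumes a classic libpcap file (not pcapng), Ethernet framing without VLAN tags, and IPv4 traffic; it prints every packet carrying RST or FIN with its timestamp and endpoints.

```python
import io
import struct
import sys
from typing import BinaryIO, Iterator, List, NamedTuple, Tuple

FIN, RST, ACK = 0x01, 0x04, 0x10  # TCP flag bits (byte 13 of the TCP header)


class TcpPacket(NamedTuple):
    ts: float   # capture timestamp, seconds since the epoch
    src: str    # "ip:port"
    dst: str
    flags: int


def _ip(raw: bytes) -> str:
    return ".".join(str(b) for b in raw)


def iter_tcp_packets(f: BinaryIO) -> Iterator[TcpPacket]:
    """Yield TCP packets from a classic libpcap file (Ethernet + IPv4 only)."""
    header = f.read(24)
    if header[:4] == b"\xd4\xc3\xb2\xa1":
        endian = "<"  # file written on a little-endian host
    elif header[:4] == b"\xa1\xb2\xc3\xd4":
        endian = ">"
    else:
        raise ValueError("not a classic microsecond pcap (pcapng is not handled)")
    (linktype,) = struct.unpack(endian + "I", header[20:24])
    if linktype != 1:
        raise ValueError("only Ethernet captures (linktype 1) are handled")
    while True:
        record = f.read(16)
        if len(record) < 16:
            return  # end of file
        ts_sec, ts_usec, incl_len, _orig_len = struct.unpack(endian + "IIII", record)
        frame = f.read(incl_len)
        if len(frame) < 34 or frame[12:14] != b"\x08\x00":
            continue  # too short for Ethernet + IPv4, or not IPv4
        ip = frame[14:]
        ihl = (ip[0] & 0x0F) * 4  # IPv4 header length in bytes
        if ip[9] != 6 or len(ip) < ihl + 14:
            continue  # not TCP, or TCP header truncated
        tcp = ip[ihl:]
        sport, dport = struct.unpack("!HH", tcp[:4])
        yield TcpPacket(ts_sec + ts_usec / 1e6,
                        f"{_ip(ip[12:16])}:{sport}", f"{_ip(ip[16:20])}:{dport}", tcp[13])


def close_events(data: bytes) -> List[Tuple[TcpPacket, str]]:
    """List packets that end a connection: RST, or FIN (usually FIN, ACK)."""
    events = []
    for pkt in iter_tcp_packets(io.BytesIO(data)):
        if pkt.flags & RST:
            events.append((pkt, "RST"))
        elif pkt.flags & FIN:
            events.append((pkt, "FIN, ACK" if pkt.flags & ACK else "FIN"))
    return events


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], "rb") as fh:
        for pkt, label in close_events(fh.read()):
            print(f"{pkt.ts:.6f} {pkt.src} -> {pkt.dst} {label}")
```

Run it as `python close_events.py origin.pcap` (file names are placeholders). A FIN, ACK from the origin's address shortly before the request that timed out points to the origin closing the connection first.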

#CloudWAF(formerlyIncapsula)

#ContentDeliveryNetwork

------------------------------

Gopalakrishnan Manisekaran

Senior Manager

Bharti Airtel Ltd

Noida

------------------------------